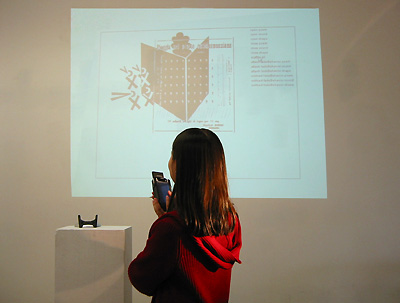

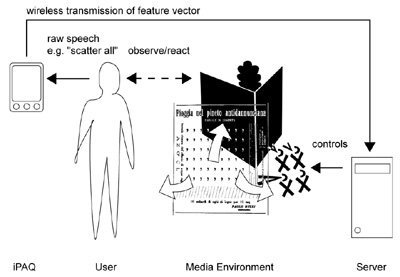

Linking Speech Recognition with the Media Environment

The documentation QuickTime video clip of this installation can be downloaded in different sizes:

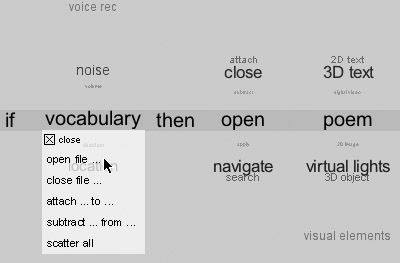

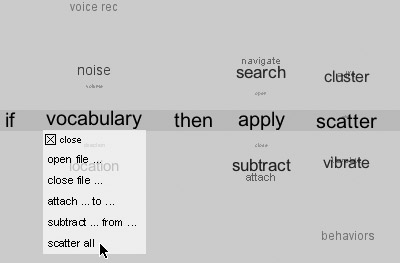

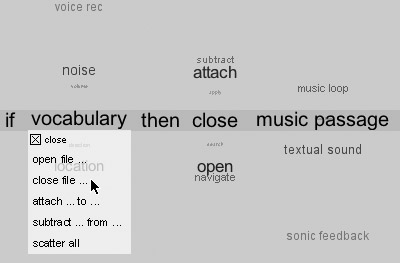

The following simplified screenshots show how we used the EIM to author the media environment's responses to the spoken commands:

The above screenshot shows that the command "open poem" recognized by the voice recognition (in the EIM's IF part) is linked to an action in which a poem from the database is opened in the media environment (shown in the EIM's THEN part).

The above screenshot shows how the "scatter all" command/reaction is programmed.

And here we see the "close sound" line in the EIM.
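
The IF/THEN linking described for the three commands can be pictured as a small rule table. The Python sketch below is only an illustration and does not show how the EIM itself is implemented: `MediaEnvironment`, `build_rules`, and `dispatch` are hypothetical names, and the reactions for "scatter all" and "close sound" are recorded only as labels because their details are not given here.

```python
from typing import Callable, Dict, List


class MediaEnvironment:
    """Hypothetical stand-in for the media environment; it only logs actions."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def open_poem(self) -> None:
        # THEN part: a poem from the database is opened in the environment.
        self.log.append("open poem from database")

    def scatter_all(self) -> None:
        # Reaction details are not described in the text; label only.
        self.log.append("scatter all")

    def close_sound(self) -> None:
        # Reaction details are not described in the text; label only.
        self.log.append("close sound")


def build_rules(env: MediaEnvironment) -> Dict[str, Callable[[], None]]:
    """Map each recognized command (IF part) to an action (THEN part)."""
    return {
        "open poem": env.open_poem,
        "scatter all": env.scatter_all,
        "close sound": env.close_sound,
    }


def dispatch(rules: Dict[str, Callable[[], None]], recognized: str) -> bool:
    """Run the action linked to a recognized phrase; return False if none matches."""
    action = rules.get(recognized.strip().lower())
    if action is None:
        return False
    action()
    return True


if __name__ == "__main__":
    env = MediaEnvironment()
    rules = build_rules(env)
    for phrase in ["open poem", "scatter all", "close sound"]:
        dispatch(rules, phrase)
    print(env.log)
```

Keeping the rules in a plain dictionary mirrors the one-line-per-command layout of the screenshots: adding a new spoken command means adding one entry, without touching the dispatch logic.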