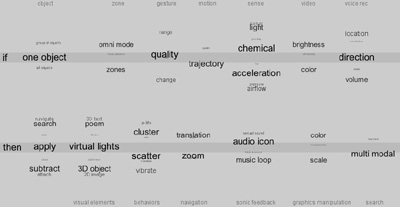

Object Based Emergent Intention Matrix: EIM

The Emergent Intention Matrix (EIM) will be an intuitive authoring tool for linking multiple streams of sense data to a generative virtual environment, or to other computer-articulated environments (e.g., robotic, alternate networked, and/or ubiquitous computing environments), which this poly-sensing data then drives. Within this environment, the behavior of an object, person, or chemical event in the space becomes an interface to diverse imaging, media behaviors, sonic feedback, and/or other affectors in the linked virtual space or computer-articulated environment. Such a space can also be intelligently linked to other related spaces via the internet.

Instructions: A first simulation of the EIM user interface is available as a Shockwave movie (130K). To make a choice, click an item and drag it with the mouse. Some items already have submenus, which you can open by double-clicking the item.
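One way to picture the "matrix" idea is as a routing table in which many sense-data streams drive many affectors. The sketch below is a hypothetical illustration only, not the EIM's actual design. The class name `IntentionMatrix`, the `link`/`update` methods, the sensor and affector names, and the weighted-sum mapping are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class IntentionMatrix:
    """Hypothetical routing matrix: sensor streams (rows) drive affectors (columns)."""

    # weights[(sensor, affector)]: how strongly one sensor stream drives one affector
    weights: Dict[Tuple[str, str], float] = field(default_factory=dict)
    # affector name -> callback that applies an intensity in the linked environment
    affectors: Dict[str, Callable[[float], None]] = field(default_factory=dict)

    def link(self, sensor: str, affector: str, weight: float = 1.0) -> None:
        """Author one connection from a sense-data stream to an affector."""
        self.weights[(sensor, affector)] = weight

    def update(self, readings: Dict[str, float]) -> Dict[str, float]:
        """Combine the latest readings into one weighted-sum intensity per affector."""
        totals: Dict[str, float] = {name: 0.0 for name in self.affectors}
        for (sensor, affector), weight in self.weights.items():
            # Ignore links whose sensor has no reading or whose affector is unknown.
            if sensor in readings and affector in totals:
                totals[affector] += weight * readings[sensor]
        # Push each computed intensity to its affector callback.
        for name, value in totals.items():
            self.affectors[name](value)
        return totals


# Usage: motion drives both sound and imagery; temperature only nudges imagery.
log: List[str] = []
eim = IntentionMatrix(
    affectors={
        "sound_pitch": lambda v: log.append(f"pitch {v:.2f}"),
        "image_brightness": lambda v: log.append(f"brightness {v:.2f}"),
    }
)
eim.link("motion", "sound_pitch", 0.5)
eim.link("motion", "image_brightness", 1.0)
eim.link("temperature", "image_brightness", 0.2)
result = eim.update({"motion": 0.8, "temperature": 1.5})
print(result)
print(log)
```

A weighted sum is simply the most basic mapping to illustrate; an actual authoring tool could just as plausibly use thresholds, rule sets, or learned mappings between poly-sensing input and environment behavior.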
